Experience using Valgrind memcheck, a memory leak detection tool for C/C++

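Valgrind on Linux really is a great tool (if you don't know Valgrind, please see references (1) and (2)). It helped me track down quite a few memory management errors in my C++ code. A while ago I was puzzled about why a program that ran fine under VS 2013 crashed when compiled with g++ and run on Linux; all I got was a pile of assembly that I couldn't read. After being stuck for a long time, I figured it had to be a memory error. VS generally doesn't check for these: however riddled your program is with leaks and memory management errors, as long as they don't affect execution and nothing reads what it shouldn't, VS won't tell you (presumably VS doesn't have this kind of memory checking built in). So a program written in VS is not necessarily correct or robust.

Update: thanks to cnblogs user @shines77 for the kind recommendation. VS has a memory leak detection plugin, VLD (Visual Leak Detector). It has to be downloaded and installed (see the official documentation for how). It is very simple to use: add #include <vld.h> to the first entry-point source file, and the report appears in the Output window. I installed and tried it, but, whether because of a faulty install or something else, the output was always "No memory leaks detected." regardless of whether the program leaked.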

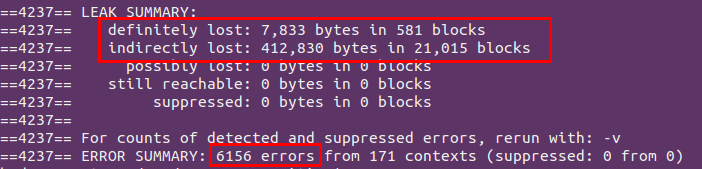

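Below are the results of my first Valgrind run and the results after a few fixes (I haven't finished fixing, so quite a few leaks remain...):

First run: painful to look at, because the program is fairly large.

After fixing things bit by bit following the hints, a few errors and leaks remain and I'm still working on them, but at least the program now runs successfully...

I'm really grateful to Valgrind for finding a pile of memory problems. Along the way I was also embarrassed by the basic mistakes I had made, so I'm writing them down to remember them.

1. The most frequent and most basic error: mismatched use of malloc/new/new[] and free/delete/delete[]

This kind of error mainly came from my unfamiliarity with how new/new[] and delete/delete[] work in C++. I freed everything allocated with new or new[] using plain delete, and some variables allocated with malloc were released with delete. As a result, allocation and release were mismatched in many places and a lot of memory was never freed. For easier maintenance, I later switched to new/new[] and delete/delete[] everywhere and dropped C's malloc and free.

If we divide the types a user can new into built-in types and user-defined types, the following operations should be familiar to everyone, and they are all fine.

(1) Built-in data types

Single pointer (1D array):

// allocate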

int *d = new int[5];

// free

delete[] d;

Pointer to pointer (2D array):

// allocate

int **d = new int*[5];

for (int i = 0; i < 5; i++)

d[i] = new int[10];

// free

for (int i = 0; i < 5; i++)

delete[] d[i];

delete[] d;

(2) User-defined data types

Consider a type like this:

class DFA {

bool is_mark;

char *s;

public:

~DFA() { printf("delete it.\n"); }

};

Single pointer:

DFA *d = new DFA();

delete d;

Pointer to pointer (2D array):

// allocate

DFA **d = new DFA*[5];

for (int i = 0; i < 5; i++)

d[i] = new DFA();

// free

for (int i = 0; i < 5; i++)

delete d[i];

delete[]d;

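There is nothing wrong here, because new/delete and new[]/delete[] are always used in matching pairs, and Valgrind passes this cleanly. But why do they have to be paired? What is the underlying reason?

Digging into this may seem to have little practical value, but it is worth studying for anyone who wants to understand C++ internals, or for beginners like me who keep getting releases wrong and leaking lots of memory. Once you know the why, you are less likely to repeat the mistake.

Reference (3) describes it like this:

Normally, new hands back a block of the size the user asked for, but the runtime actually allocates a larger block so that delete can later release it correctly.

The extra space is stored before the pointer the user receives and after the end of the user's region.

In practice (this is the layout of the MSVC debug heap): blockSize = sizeof(_CrtMemBlockHeader) + nSize + nNoMansLandSize; where blockSize is the size actually allocated by the system, and _CrtMemBlockHeader is the header of the new'd block, which holds the requested size and some other information. nNoMansLandSize is the size of the trailing guard used for overrun checking, typically 4 bytes filled with "FDFDFDFD"; if the user writes past the end into this area, an assert fires when it is checked. nSize is the usable memory actually allocated for us.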

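When the user calls new there are two cases:

A. The type is a built-in type, or a struct with no user-defined destructor

B. The type is a struct or class with a user-defined destructor

The difference is that when there is a user-defined destructor, delete must call it. So how does the compiler know how many objects' destructors to call at delete[] time? The answer is that in case B, new[] allocates an extra 4 bytes (on a 32-bit build) after the block header and just before the pointer the user receives, and records the array length there, so that delete[] can read the count and call the right number of destructors.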

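This description may be a little hard to follow; reference (4) explains it in more detail and makes it click. The explanation also implies a corollary: if the type is a built-in type or a struct without a user-defined destructor, the compiler does not allocate the extra 4 bytes before the user's pointer, because delete does not need to call any destructors and so does not need the element count (the count is needed only to know how many destructors to call). It simply passes the array's start address to the deallocator and frees the block. In that situation delete and delete[] end up doing the same thing on such implementations, although the C++ standard still treats releasing new[] memory with delete as undefined behavior.

So the following release also happens to work on these implementations:

// allocate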

int *d = new int[5];

// free

delete d;

Running it under Valgrind gives:

==2955== Memcheck, a memory error detector

==2955== Copyright (C) 2002-2012, and GNU GPL'd, by Julian Seward et al.

==2955== Using Valgrind-3.8.1 and LibVEX; rerun with -h for copyright info

==2955== Command: ./test_int

==2955==

==2955== Mismatched free() / delete / delete []

==2955== at 0x402ACFC: operator delete(void*) (in /usr/lib/valgrind/vgpreload_memcheck-x86-linux.so)

==2955== by 0x8048530: main (in /home/hadoop/test/test_int)

==2955== Address 0x434a028 is 0 bytes inside a block of size 20 alloc'd

==2955== at 0x402B774: operator new[](unsigned int) (in /usr/lib/valgrind/vgpreload_memcheck-x86-linux.so)

==2955== by 0x8048520: main (in /home/hadoop/test/test_int)

==2955==

==2955==

==2955== HEAP SUMMARY:

==2955== in use at exit: 0 bytes in 0 blocks

==2955== total heap usage: 1 allocs, 1 frees, 20 bytes allocated

==2955==

==2955== All heap blocks were freed -- no leaks are possible

==2955==

==2955== For counts of detected and suppressed errors, rerun with: -v

==2955== ERROR SUMMARY: 1 errors from 1 contexts (suppressed: 0 from 0)

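First, "All heap blocks were freed -- no leaks are possible" shows that the release above really did free the whole block; it is not the case, as some people believe, that delete d; frees only the first element of d[] and leaves the rest allocated. But "Mismatched free() / delete / delete []" shows that Valgrind does not accept this. There is no leak, but new[] does not match delete, and this coding style easily leads to basic mistakes, so Valgrind reports an error. My guess is that Valgrind's implementation doesn't go into such detail: it simply checks that new is paired with delete and new[] with delete[], regardless of the fact that new[] paired with delete sometimes causes no visible problem.

In summary, my lesson is: in some cases freeing new[] memory with delete does not fail, but in most cases it causes serious memory problems, so always make a habit of pairing new with delete and new[] with delete[].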

2. The most baffling error: a pile of incomprehensible Invalid read/write errors (update: solved)

Consider a program like this:

#include <stdio.h>

#include <string.h>

#include <stdlib.h>

struct accept_pair {

bool is_accept_state;

bool is_strict_end;

char app_name[0];

};

int main() {

char *s = "Alexia";

accept_pair *ap = (accept_pair*)malloc(sizeof(accept_pair) + sizeof(s));

strcpy(ap->app_name, s);

printf("app name: %s\n", ap->app_name);

free(ap);

return 0;

}

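First, a brief explanation of the program:

The struct defines a zero-length array because of my requirements: elsewhere I use a very large array of accept_pair, in which only a few elements have a meaningful app_name (it depends on some condition: app_name is assigned only if the condition is true; if it is false, app_name is meaningless and empty). With char app_name[20], most accept_pair elements would waste those 20 bytes. So here I allocate nothing up front and give space only to the elements that need it, following the old idea of "allocate on demand". Some may point out that char *app_name would also work and also allows on-demand allocation. That's true; it just costs one pointer more (4 bytes on my system), so I treat it as a fallback.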

Under g++ it tested fine and ran correctly, but Valgrind reported errors: not leaks, but invalid memory reads and writes:

==3511== Memcheck, a memory error detector

==3511== Copyright (C) 2002-2012, and GNU GPL'd, by Julian Seward et al.

==3511== Using Valgrind-3.8.1 and LibVEX; rerun with -h for copyright info

==3511== Command: ./zero

==3511==

==3511== Invalid write of size 1

==3511== at 0x402CD8B: strcpy (in /usr/lib/valgrind/vgpreload_memcheck-x86-linux.so)

==3511== by 0x80484E3: main (in /home/hadoop/test/zero)

==3511== Address 0x420002e is 0 bytes after a block of size 6 alloc'd

==3511== at 0x402C418: malloc (in /usr/lib/valgrind/vgpreload_memcheck-x86-linux.so)

==3511== by 0x80484C8: main (in /home/hadoop/test/zero)

==3511==

==3511== Invalid write of size 1

==3511== at 0x402CDA5: strcpy (in /usr/lib/valgrind/vgpreload_memcheck-x86-linux.so)

==3511== by 0x80484E3: main (in /home/hadoop/test/zero)

==3511== Address 0x4200030 is 2 bytes after a block of size 6 alloc'd

==3511== at 0x402C418: malloc (in /usr/lib/valgrind/vgpreload_memcheck-x86-linux.so)

==3511== by 0x80484C8: main (in /home/hadoop/test/zero)

==3511==

==3511== Invalid read of size 1

==3511== at 0x40936A5: vfprintf (vfprintf.c:1655)

==3511== by 0x409881E: printf (printf.c:34)

==3511== by 0x4063934: (below main) (libc-start.c:260)

==3511== Address 0x420002e is 0 bytes after a block of size 6 alloc'd

==3511== at 0x402C418: malloc (in /usr/lib/valgrind/vgpreload_memcheck-x86-linux.so)

==3511== by 0x80484C8: main (in /home/hadoop/test/zero)

==3511==

==3511== Invalid read of size 1

==3511== at 0x40BC3C0: [email protected]@GLIBC_2.1 (fileops.c:1311)

==3511== by 0x4092184: vfprintf (vfprintf.c:1655)

==3511== by 0x409881E: printf (printf.c:34)

==3511== by 0x4063934: (below main) (libc-start.c:260)

==3511== Address 0x420002f is 1 bytes after a block of size 6 alloc'd

==3511== at 0x402C418: malloc (in /usr/lib/valgrind/vgpreload_memcheck-x86-linux.so)

==3511== by 0x80484C8: main (in /home/hadoop/test/zero)

==3511==

==3511== Invalid read of size 1

==3511== at 0x40BC3D7: [email protected]@GLIBC_2.1 (fileops.c:1311)

==3511== by 0x4092184: vfprintf (vfprintf.c:1655)

==3511== by 0x409881E: printf (printf.c:34)

==3511== by 0x4063934: (below main) (libc-start.c:260)

==3511== Address 0x420002e is 0 bytes after a block of size 6 alloc'd

==3511== at 0x402C418: malloc (in /usr/lib/valgrind/vgpreload_memcheck-x86-linux.so)

==3511== by 0x80484C8: main (in /home/hadoop/test/zero)

==3511==

==3511== Invalid read of size 4

==3511== at 0x40C999C: __GI_mempcpy (mempcpy.S:59)

==3511== by 0x40BC310: [email protected]@GLIBC_2.1 (fileops.c:1329)

==3511== by 0x4092184: vfprintf (vfprintf.c:1655)

==3511== by 0x409881E: printf (printf.c:34)

==3511== by 0x4063934: (below main) (libc-start.c:260)

==3511== Address 0x420002c is 4 bytes inside a block of size 6 alloc'd

==3511== at 0x402C418: malloc (in /usr/lib/valgrind/vgpreload_memcheck-x86-linux.so)

==3511== by 0x80484C8: main (in /home/hadoop/test/zero)

==3511==

app name: Alexia

==3511==

==3511== HEAP SUMMARY:

==3511== in use at exit: 0 bytes in 0 blocks

==3511== total heap usage: 1 allocs, 1 frees, 6 bytes allocated

==3511==

==3511== All heap blocks were freed -- no leaks are possible

==3511==

==3511== For counts of detected and suppressed errors, rerun with: -v

==3511== ERROR SUMMARY: 9 errors from 6 contexts (suppressed: 0 from 0)

From the report we can see:

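strcpy(ap->app_name, s); is an invalid write and printf("app name: %s\n", ap->app_name); is an invalid read. Both mean Valgrind considers the memory at ap->app_name invalid. But I clearly allocated memory for it; I just didn't state explicitly that the space was meant for app_name. Can a zero-length array char app_name[0] in a struct not be written to? Or am I using zero-length arrays wrong? At the time I couldn't figure it out and asked for help...

Update: thanks to cnblogs user @shines77 for the correction. I made a really basic mistake here: I forgot that s in main is a char*, so sizeof(s) is 4 (or 8 on a 64-bit machine). Therefore accept_pair *ap = (accept_pair*)malloc(sizeof(accept_pair) + sizeof(s)); does not allocate enough space for app_name, and of course Invalid read/write errors appear. What a silly mistake... Thinking back, I had copied this line straight from my project, where s is not a char*, and forgot to change it to accept_pair *ap = (accept_pair*)malloc(sizeof(accept_pair) + strlen(s) + 1);. I should be more careful in future; this wasted my own time and everyone else's.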

3. The most puzzling leak: definitely lost / indirectly lost (update: solved)

Consider the following program:

#include <stdio.h>

#include <string.h>

class accept_pair {

public:

bool is_accept_state;

bool is_strict_end;

char *app_name;

public:

accept_pair(bool is_accept = false, bool is_end = false);

~accept_pair();

};

class DFA {

public:

unsigned int _size;

accept_pair **accept_states;

public:

DFA(int size);

~DFA();

void add_state(int index, char *s);

void add_size(int size);

};

int main() {

char *s = "Alexia";

DFA *dfa = new DFA(3);

dfa->add_state(0, s);

dfa->add_state(1, s);

dfa->add_state(2, s);

dfa->add_size(2);

dfa->add_state(3, s);

dfa->add_state(4, s);

printf("\napp_name: %s\n", dfa->accept_states[4]->app_name);

printf("size: %d\n\n", dfa->_size);

delete dfa;

return 0;

}

accept_pair::accept_pair(bool is_accept, bool is_end) {

is_accept_state = is_accept;

is_strict_end = is_end;

app_name = NULL;

}

accept_pair::~accept_pair() {

if (app_name) {

printf("delete accept_pair.\n");

delete[] app_name;

}

}

DFA::DFA(int size) {

_size = size;

accept_states = new accept_pair*[_size];

for (int s = 0; s < _size; s++) {

accept_states[s] = NULL;

}

}

DFA::~DFA() {

for (int i = 0; i < _size; i++) {

if (accept_states[i]) {

printf("delete dfa.\n");

delete accept_states[i];

accept_states[i] = NULL;

}

}

delete[] accept_states;

}

void DFA::add_state(int index, char *s) {

accept_states[index] = new accept_pair(true, true);

accept_states[index]->app_name = new char[strlen(s) + 1];

memcpy(accept_states[index]->app_name, s, strlen(s) + 1);

}

void DFA::add_size(int size) {

// reallocate memory for accept_states.

accept_pair **tmp_states = new accept_pair*[size + _size];

for (int s = 0; s < size + _size; s++)

tmp_states[s] = new accept_pair(false, false);

for (int s = 0; s < _size; s++) {

tmp_states[s]->is_accept_state = accept_states[s]->is_accept_state;

tmp_states[s]->is_strict_end = accept_states[s]->is_strict_end;

if (accept_states[s]->app_name != NULL) {

tmp_states[s]->app_name = new char[strlen(accept_states[s]->app_name) + 1];

memcpy(tmp_states[s]->app_name, accept_states[s]->app_name, strlen(accept_states[s]->app_name) + 1);

}

}

// free old memory.

for (int s = 0; s < _size; s++) {

if (accept_states[s] != NULL) {

delete accept_states[s];

accept_states[s] = NULL;

}

}

_size += size;

delete []accept_states;

accept_states = tmp_states;

}

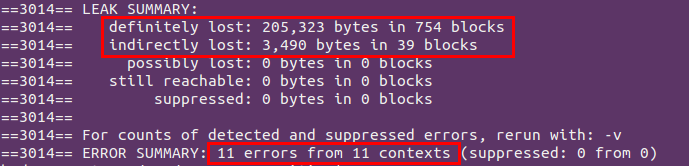

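It is a bit long, but the logic is simple. add_size() first allocates a larger accept_pair array, copies all existing data into it, then frees the space held by the old array, and finally points the old array pointer at the newly allocated memory. This is a demo program, and as far as I could tell it had no leaks, since all allocated memory is eventually freed in the DFA destructor. Yet Valgrind's report showed one leak (the lines without the ==3093== prefix are the program's own output):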

==3093== Memcheck, a memory error detector

==3093== Copyright (C) 2002-2012, and GNU GPL'd, by Julian Seward et al.

==3093== Using Valgrind-3.8.1 and LibVEX; rerun with -h for copyright info

==3093== Command: ./test

==3093==

delete accept_pair.

delete accept_pair.

delete accept_pair.

app_name: Alexia

size: 5

delete dfa.

delete accept_pair.

delete dfa.

delete accept_pair.

delete dfa.

delete accept_pair.

delete dfa.

delete accept_pair.

delete dfa.

delete accept_pair.

==3093==

==3093== HEAP SUMMARY:

==3093== in use at exit: 16 bytes in 2 blocks

==3093== total heap usage: 21 allocs, 19 frees, 176 bytes allocated

==3093==

==3093== 16 bytes in 2 blocks are definitely lost in loss record 1 of 1

==3093== at 0x402BE94: operator new(unsigned int) (in /usr/lib/valgrind/vgpreload_memcheck-x86-linux.so)

==3093== by 0x8048A71: DFA::add_size(int) (in /home/hadoop/test/test)

==3093== by 0x8048798: main (in /home/hadoop/test/test)

==3093==

==3093== LEAK SUMMARY:

==3093== definitely lost: 16 bytes in 2 blocks

==3093== indirectly lost: 0 bytes in 0 blocks

==3093== possibly lost: 0 bytes in 0 blocks

==3093== still reachable: 0 bytes in 0 blocks

==3093== suppressed: 0 bytes in 0 blocks

==3093==

==3093== For counts of detected and suppressed errors, rerun with: -v

==3093== ERROR SUMMARY: 1 errors from 1 contexts (suppressed: 0 from 0)

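This says that some memory allocated with new inside add_size() was never freed. I found that baffling. At first I thought it was because tmp_states was not freed after being assigned to accept_states, so I added delete tmp_states; at the end, which only produced more errors. I trust this is not a false positive from Valgrind, which means I still didn't really understand new and delete in C++. I genuinely couldn't explain this leak and hoped someone could tell me where the problem was.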

——————————————————————————————————————————

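Update: thanks to cnblogs user @NewClear for clearing this up. There really is a leak here. Below is his answer:

In problem 3 there are two leaks.

DFA::add_state directly does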

accept_states[index] = new accept_pair(true, true);

If the existing accept_states[index] is not NULL, the old object is leaked.

And in DFA::add_size,

for (int s = 0; s < size + _size; s++)

tmp_states[s] = new accept_pair(false, false);

a new accept_pair is created for every element of the newly allocated tmp_states.

So after dfa->add_size(2); in main, there are 5 elements in total, and none of the 5 is NULL.

After that,

dfa->add_state(3, s);

dfa->add_state(4, s);

cause the objects originally at index 3 and 4 to be leaked.

Your system is 32-bit, so one accept_pair is 8 bytes, and two objects are 16 bytes.

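The fix is simple: change add_size so that, when reallocating, it creates accept_pair objects only for the existing data and initializes the other slots to NULL. Objects are then created in add_state only when they are needed. The modified add_size looks like this: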

void DFA::add_size(int size) {

// reallocate memory for accept_states.

accept_pair **tmp_states = new accept_pair*[size + _size];

for (int s = 0; s < size + _size; s++)

tmp_states[s] = NULL;

for (int s = 0; s < _size; s++) {

tmp_states[s] = new accept_pair(false, false);

tmp_states[s]->is_accept_state = accept_states[s]->is_accept_state;

tmp_states[s]->is_strict_end = accept_states[s]->is_strict_end;

if (accept_states[s]->app_name != NULL) {

tmp_states[s]->app_name = new char[strlen(accept_states[s]->app_name) + 1];

memcpy(tmp_states[s]->app_name, accept_states[s]->app_name, strlen(accept_states[s]->app_name) + 1);

}

}

// free old memory.

for (int s = 0; s < _size; s++) {

if (accept_states[s] != NULL) {

delete accept_states[s];

accept_states[s] = NULL;

}

}

_size += size;

delete[]accept_states;

accept_states = tmp_states;

}

Author: cnblogs Alexia (minmin)